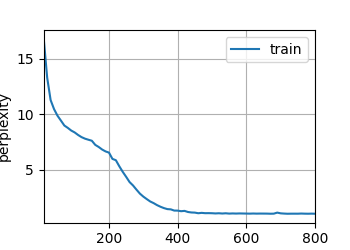

Deep Learning Note 30 Implementing a Recurrent Neural Network (RNN) from Scratch

import math import torch from torch import nn from torch.nn import functional as F from d2l import torch as d2l batch_size, num_steps = 32, 35 train_iter, vocab = d2l.load_data_tim...
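
The excerpt stops after loading the data. The following is a minimal, self-contained sketch of the forward pass a from-scratch RNN typically uses. It assumes the standard formulation `H_t = tanh(X_t W_xh + H_{t-1} W_hh + b_h)` and `O_t = H_t W_hq + b_q`. The helper names (`get_params`, `init_rnn_state`, `rnn`), the 28-token vocabulary and the random token batch are illustrative assumptions rather than the post's actual code, and `d2l` is not used.

```python
import torch
from torch.nn import functional as F

def get_params(vocab_size, num_hiddens):
    """Hypothetical helper: small random weights for an RNN over one-hot inputs."""
    num_inputs = num_outputs = vocab_size

    def normal(shape):
        return torch.randn(size=shape) * 0.01

    W_xh = normal((num_inputs, num_hiddens))   # input -> hidden
    W_hh = normal((num_hiddens, num_hiddens))  # hidden -> hidden (the recurrence)
    b_h = torch.zeros(num_hiddens)
    W_hq = normal((num_hiddens, num_outputs))  # hidden -> output
    b_q = torch.zeros(num_outputs)
    params = [W_xh, W_hh, b_h, W_hq, b_q]
    for param in params:
        param.requires_grad_(True)
    return params

def init_rnn_state(batch_size, num_hiddens):
    # A tuple, so the same interface can later hold (H, C) for an LSTM
    return (torch.zeros((batch_size, num_hiddens)),)

def rnn(inputs, state, params):
    """inputs: (num_steps, batch_size, vocab_size); loops over time steps."""
    W_xh, W_hh, b_h, W_hq, b_q = params
    H, = state
    outputs = []
    for X in inputs:  # X: (batch_size, vocab_size)
        H = torch.tanh(X @ W_xh + H @ W_hh + b_h)
        Y = H @ W_hq + b_q
        outputs.append(Y)
    # Concatenate all time steps: (num_steps * batch_size, vocab_size)
    return torch.cat(outputs, dim=0), (H,)

# Illustrative sizes (assumed): 28-token vocabulary, 2 sequences of 5 steps
vocab_size, num_hiddens, batch_size, num_steps = 28, 16, 2, 5
tokens = torch.randint(0, vocab_size, (batch_size, num_steps))
X = F.one_hot(tokens.T, vocab_size).float()  # time-major one-hot inputs
params = get_params(vocab_size, num_hiddens)
Y, (H,) = rnn(X, init_rnn_state(batch_size, num_hiddens), params)
print(Y.shape, H.shape)  # torch.Size([10, 28]) torch.Size([2, 16])
```

The outputs of all time steps are concatenated along dimension 0. The logits therefore come out flattened as `(num_steps * batch_size, vocab_size)`, which suits a cross-entropy loss over flattened labels.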

`*args` and `**kwargs` in Python

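`**kwargs` and `*args` are two special parameters in Python. When used in a function definition, they let the function accept an arbitrary number of arguments of any type. The main difference between them is how they handle those arguments: `*args` (variable positional arguments): it allows you to pass any number...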
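
Here is a small sketch of both parameters in action. It shows how they collect extra arguments, how the same symbols unpack arguments at a call site, and how keyword arguments are forwarded to a parent constructor, a pattern common in PyTorch module subclasses (`super().__init__(**kwargs)`). The names `describe`, `Base`, `Child` and `add` are hypothetical and chosen only for illustration.

```python
def describe(*args, **kwargs):
    # args collects extra positional arguments into a tuple,
    # kwargs collects extra keyword arguments into a dict
    return args, kwargs

positional, keyword = describe(1, "two", 3.0, lr=0.1, num_epochs=500)
print(positional)  # (1, 'two', 3.0)
print(keyword)     # {'lr': 0.1, 'num_epochs': 500}

class Base:
    def __init__(self, name="base"):
        self.name = name

class Child(Base):
    def __init__(self, size, **kwargs):
        # Keyword arguments Child does not use itself are passed on to the parent
        super().__init__(**kwargs)
        self.size = size

child = Child(3, name="child")
print(child.name, child.size)  # child 3

def add(a, b, c):
    return a + b + c

nums = (1, 2)
# At a call site, * unpacks a sequence into positional arguments
print(add(*nums, c=3))  # 6
```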

Deep Learning Note 31 Concise Implementation of an RNN

import torch from torch import nn from d2l import torch as d2l from torch.nn import functional as F batch_size, num_steps = 32, 35 train_iter, vocab = d2l.load_data_time_machine(ba...
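
For the concise version, this sketch shows what `nn.RNN` consumes and returns. It reuses the batch size and number of steps from the excerpt (32 and 35) and wraps the layer with an output projection. The vocabulary size of 28, the hidden size of 256 and the wrapper class `RNNModelSketch` are assumptions for illustration, not the post's code.

```python
import torch
from torch import nn
from torch.nn import functional as F

vocab_size, num_hiddens = 28, 256  # assumed sizes
batch_size, num_steps = 32, 35     # same values as in the excerpt

# nn.RNN expects time-major input by default: (num_steps, batch_size, input_size)
rnn_layer = nn.RNN(vocab_size, num_hiddens)
state = torch.zeros((1, batch_size, num_hiddens))  # (num_layers * num_directions, batch, hidden)
X = torch.rand(size=(num_steps, batch_size, vocab_size))
Y, state_new = rnn_layer(X, state)
print(Y.shape, state_new.shape)  # torch.Size([35, 32, 256]) torch.Size([1, 32, 256])

class RNNModelSketch(nn.Module):
    """Hypothetical wrapper: one-hot encode tokens, run the RNN, project to vocab logits."""

    def __init__(self, rnn_layer, vocab_size):
        super().__init__()
        self.rnn = rnn_layer
        self.vocab_size = vocab_size
        self.linear = nn.Linear(rnn_layer.hidden_size, vocab_size)

    def forward(self, inputs, state):
        # inputs: (batch_size, num_steps) token indices -> time-major one-hot floats
        X = F.one_hot(inputs.T.long(), self.vocab_size).float()
        Y, state = self.rnn(X, state)
        # nn.RNN returns only hidden states, so an output layer is still needed
        output = self.linear(Y.reshape((-1, Y.shape[-1])))
        return output, state

model = RNNModelSketch(rnn_layer, vocab_size)
tokens = torch.randint(0, vocab_size, (batch_size, num_steps))
logits, _ = model(tokens, state)
print(logits.shape)  # torch.Size([1120, 28])
```

Unlike the from-scratch version, `nn.RNN` does not include the output layer, which is why the wrapper adds an `nn.Linear` on top of the hidden states.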

Deep Learning Note 38 Seq2Seq with Attention

import torch import torch.nn as nn from d2l import torch as d2l class AttentionDecoder(d2l.Decoder): """Base interface for a decoder with an attention mechanism""" def __init__...
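
The excerpt shows only the start of the base class. As a rough, self-contained illustration of what a concrete attention decoder can do at each step, here is a sketch built on additive attention. It uses the decoder's top-layer hidden state as the query and the encoder outputs as keys and values. It does not subclass `d2l.Decoder` and skips the padding mask (`valid_lens`). The class names and all sizes are assumptions.

```python
import torch
from torch import nn
from torch.nn import functional as F

class AdditiveAttentionSketch(nn.Module):
    """score(q, k) = w_v^T tanh(W_q q + W_k k); no padding mask in this sketch."""

    def __init__(self, key_size, query_size, num_hiddens):
        super().__init__()
        self.W_k = nn.Linear(key_size, num_hiddens, bias=False)
        self.W_q = nn.Linear(query_size, num_hiddens, bias=False)
        self.w_v = nn.Linear(num_hiddens, 1, bias=False)

    def forward(self, queries, keys, values):
        # queries: (batch, num_queries, q); keys and values: (batch, num_kv, k or v)
        features = torch.tanh(self.W_q(queries).unsqueeze(2) + self.W_k(keys).unsqueeze(1))
        scores = self.w_v(features).squeeze(-1)  # (batch, num_queries, num_kv)
        self.attention_weights = F.softmax(scores, dim=-1)
        return torch.bmm(self.attention_weights, values)  # (batch, num_queries, v)

class AttentionGRUDecoderSketch(nn.Module):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers):
        super().__init__()
        self.attention = AdditiveAttentionSketch(num_hiddens, num_hiddens, num_hiddens)
        self.embedding = nn.Embedding(vocab_size, embed_size)
        # Each step's input is the context vector concatenated with the embedded token
        self.rnn = nn.GRU(num_hiddens + embed_size, num_hiddens, num_layers)
        self.dense = nn.Linear(num_hiddens, vocab_size)

    def forward(self, X, enc_outputs, hidden_state):
        # X: (batch, num_steps) tokens; enc_outputs: (batch, src_len, num_hiddens)
        # hidden_state: (num_layers, batch, num_hiddens), e.g. the encoder's final state
        X = self.embedding(X).permute(1, 0, 2)  # (num_steps, batch, embed_size)
        outputs = []
        for x in X:
            query = hidden_state[-1].unsqueeze(1)  # top layer state: (batch, 1, num_hiddens)
            context = self.attention(query, enc_outputs, enc_outputs)  # (batch, 1, num_hiddens)
            step_input = torch.cat((context, x.unsqueeze(1)), dim=-1)
            out, hidden_state = self.rnn(step_input.permute(1, 0, 2), hidden_state)
            outputs.append(out)  # (1, batch, num_hiddens)
        logits = self.dense(torch.cat(outputs, dim=0))  # (num_steps, batch, vocab_size)
        return logits.permute(1, 0, 2), hidden_state

decoder = AttentionGRUDecoderSketch(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
enc_outputs = torch.rand(4, 5, 16)  # stand-in encoder outputs: batch 4, source length 5
hidden = torch.zeros(2, 4, 16)
tokens = torch.zeros((4, 7), dtype=torch.long)
logits, hidden = decoder(tokens, enc_outputs, hidden)
print(logits.shape, decoder.attention.attention_weights.shape)
# torch.Size([4, 7, 10]) torch.Size([4, 1, 5])
```

The step-by-step loop is needed because each step's query depends on the hidden state produced by the previous step. The whole target sequence therefore cannot be fed to the GRU in a single call.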

Deep Learning Note 37 Attention Scores

import math import torch from torch import nn from d2l import torch as d2l # Masked softmax operation def masked_softmax(X, valid_lens): """Mask elements on the last axis to perform sof...
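
A masked softmax sets every position at or beyond a sequence's valid length to a large negative number (here `-1e6`) before the softmax, so those positions receive zero weight. The sketch below implements that idea without depending on `d2l`. It inlines the masking step with `masked_fill` and accepts `valid_lens` either per batch element or per query. The function name `masked_softmax_sketch` and the `-1e6` fill value are assumptions for illustration.

```python
import torch
from torch.nn import functional as F

def masked_softmax_sketch(X, valid_lens):
    """X: (batch, num_queries, num_keys); valid_lens: None, (batch,) or (batch, num_queries)."""
    if valid_lens is None:
        return F.softmax(X, dim=-1)
    shape = X.shape
    if valid_lens.dim() == 1:
        # One length per batch element: repeat it for every query row
        valid_lens = torch.repeat_interleave(valid_lens, shape[1])
    else:
        valid_lens = valid_lens.reshape(-1)
    X = X.reshape(-1, shape[-1])
    # True where the key index is inside the valid length of that row
    positions = torch.arange(shape[-1], device=X.device)
    mask = positions[None, :] < valid_lens[:, None]
    # Large negative scores make exp(score) underflow to 0 after softmax
    X = X.masked_fill(~mask, -1e6)
    return F.softmax(X.reshape(shape), dim=-1)

X = torch.rand(2, 2, 4)
out = masked_softmax_sketch(X, torch.tensor([2, 3]))
print(out)  # first batch: last 2 columns are 0; second batch: last column is 0
```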

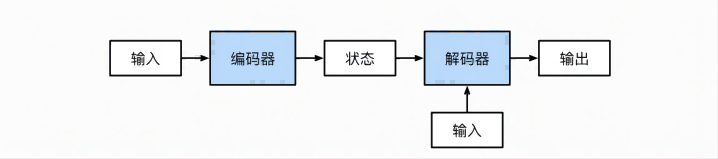

Deep Learning Note 36 Encoder-Decoder Architecture

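Architecture diagram: Code Only a few abstract classes are given here. They just lay out the architecture; the concrete implementation is left to you. from torch import nn class Encoder(nn.Module): """Base encoder interface""" def __init_...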
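
To see how such abstract classes fit together, here is a sketch of the usual encoder, decoder and wrapper interface, plus two hypothetical toy GRU subclasses so the wiring actually runs. The method names (`forward`, `init_state`) follow the common d2l-style interface. The class bodies are an assumption and not the post's truncated code. `ToyGRUEncoder`, `ToyGRUDecoder` and all sizes are made up for illustration.

```python
import torch
from torch import nn

class Encoder(nn.Module):
    """Abstract encoder: maps a source sequence to an encoded representation."""
    def forward(self, X, *args):
        raise NotImplementedError

class Decoder(nn.Module):
    """Abstract decoder: builds its initial state from the encoder output, then decodes."""
    def init_state(self, enc_outputs, *args):
        raise NotImplementedError

    def forward(self, X, state):
        raise NotImplementedError

class EncoderDecoder(nn.Module):
    def __init__(self, encoder, decoder):
        super().__init__()
        self.encoder = encoder
        self.decoder = decoder

    def forward(self, enc_X, dec_X, *args):
        enc_outputs = self.encoder(enc_X, *args)
        dec_state = self.decoder.init_state(enc_outputs, *args)
        return self.decoder(dec_X, dec_state)

# Hypothetical toy subclasses so the architecture can be exercised end to end
class ToyGRUEncoder(Encoder):
    def __init__(self, vocab_size, embed_size, num_hiddens):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.GRU(embed_size, num_hiddens, batch_first=True)

    def forward(self, X, *args):
        output, state = self.rnn(self.embedding(X))
        return output, state

class ToyGRUDecoder(Decoder):
    def __init__(self, vocab_size, embed_size, num_hiddens):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.GRU(embed_size, num_hiddens, batch_first=True)
        self.dense = nn.Linear(num_hiddens, vocab_size)

    def init_state(self, enc_outputs, *args):
        return enc_outputs[1]  # the encoder's final hidden state

    def forward(self, X, state):
        output, state = self.rnn(self.embedding(X), state)
        return self.dense(output), state

model = EncoderDecoder(ToyGRUEncoder(10, 8, 16), ToyGRUDecoder(10, 8, 16))
enc_X = torch.zeros((4, 7), dtype=torch.long)
dec_X = torch.zeros((4, 5), dtype=torch.long)
logits, state = model(enc_X, dec_X)
print(logits.shape, state.shape)  # torch.Size([4, 5, 10]) torch.Size([1, 4, 16])
```

The only contract between the two halves is `init_state`. Any encoder and decoder can be paired as long as the decoder knows how to turn the encoder's output into its starting state.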

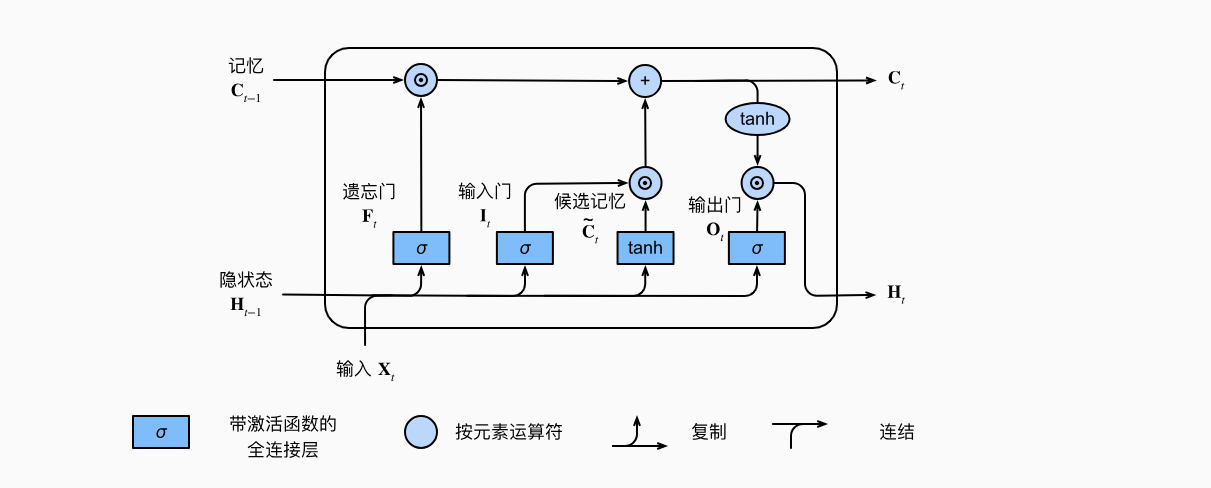

Deep Learning Note 33 Implementing LSTM from Scratch

Diagram from Prof. Hung-yi Lee: import torch from torch import nn from d2l import torch as d2l batch_size, num_steps = 32, 35 train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps) ...
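
As a companion to the diagram, here is a minimal sketch of the standard LSTM gate equations written out by hand. It uses the same parameter and state conventions as a from-scratch RNN, except that the state now carries both the hidden state `H` and the memory cell `C`. The helper names (`three`, `get_lstm_params`, `init_lstm_state`, `lstm`), the sizes and the random stand-in inputs are assumptions for illustration.

```python
import torch

def three(num_inputs, num_hiddens):
    # (input weights, hidden weights, bias) for one gate
    return (torch.randn(num_inputs, num_hiddens) * 0.01,
            torch.randn(num_hiddens, num_hiddens) * 0.01,
            torch.zeros(num_hiddens))

def get_lstm_params(vocab_size, num_hiddens):
    W_xi, W_hi, b_i = three(vocab_size, num_hiddens)  # input gate
    W_xf, W_hf, b_f = three(vocab_size, num_hiddens)  # forget gate
    W_xo, W_ho, b_o = three(vocab_size, num_hiddens)  # output gate
    W_xc, W_hc, b_c = three(vocab_size, num_hiddens)  # candidate memory cell
    W_hq = torch.randn(num_hiddens, vocab_size) * 0.01  # output layer
    b_q = torch.zeros(vocab_size)
    params = [W_xi, W_hi, b_i, W_xf, W_hf, b_f, W_xo, W_ho, b_o,
              W_xc, W_hc, b_c, W_hq, b_q]
    for param in params:
        param.requires_grad_(True)
    return params

def init_lstm_state(batch_size, num_hiddens):
    # Unlike a plain RNN, the state holds both the hidden state H and the memory cell C
    return (torch.zeros((batch_size, num_hiddens)),
            torch.zeros((batch_size, num_hiddens)))

def lstm(inputs, state, params):
    (W_xi, W_hi, b_i, W_xf, W_hf, b_f, W_xo, W_ho, b_o,
     W_xc, W_hc, b_c, W_hq, b_q) = params
    H, C = state
    outputs = []
    for X in inputs:  # X: (batch_size, vocab_size)
        I = torch.sigmoid(X @ W_xi + H @ W_hi + b_i)
        F = torch.sigmoid(X @ W_xf + H @ W_hf + b_f)
        O = torch.sigmoid(X @ W_xo + H @ W_ho + b_o)
        C_tilde = torch.tanh(X @ W_xc + H @ W_hc + b_c)
        C = F * C + I * C_tilde  # keep part of the old memory, add gated new content
        H = O * torch.tanh(C)    # expose a gated view of the memory
        outputs.append(H @ W_hq + b_q)
    return torch.cat(outputs, dim=0), (H, C)

vocab_size, num_hiddens, batch_size, num_steps = 28, 16, 2, 5  # assumed sizes
inputs = torch.rand(num_steps, batch_size, vocab_size)  # stand-in for one-hot tokens
params = get_lstm_params(vocab_size, num_hiddens)
Y, (H, C) = lstm(inputs, init_lstm_state(batch_size, num_hiddens), params)
print(Y.shape, H.shape, C.shape)
# torch.Size([10, 28]) torch.Size([2, 16]) torch.Size([2, 16])
```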

Deep Learning Note 32 Gated Recurrent Units

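A gated recurrent unit essentially adds gates that learn how much weight to give short-term versus long-term dependencies, which makes sequence prediction more reliable. The reset gate helps capture short-term dependencies in a sequence. The update gate helps capture long-term dependencies in a sequence. import torch from tor...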
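
To make the roles of the two gates concrete, here is a single GRU step written with the standard equations. With the update gate `Z` pushed toward 1, the previous hidden state is carried over almost unchanged, which is how long-range information survives. With the reset gate `R` (and `Z`) pushed toward 0, the new state depends only on the current input. The function `gru_step`, the sizes and the forced biases of ±20 are assumptions chosen only to demonstrate these two extremes.

```python
import torch

def gru_step(X, H, params):
    """One GRU time step. X: (batch, num_inputs), H: (batch, num_hiddens)."""
    W_xz, W_hz, b_z, W_xr, W_hr, b_r, W_xh, W_hh, b_h = params
    Z = torch.sigmoid(X @ W_xz + H @ W_hz + b_z)  # update gate
    R = torch.sigmoid(X @ W_xr + H @ W_hr + b_r)  # reset gate
    H_tilde = torch.tanh(X @ W_xh + (R * H) @ W_hh + b_h)  # candidate hidden state
    return Z * H + (1 - Z) * H_tilde

torch.manual_seed(0)
num_inputs, num_hiddens, batch_size = 28, 16, 2  # assumed sizes

def three():
    return (torch.randn(num_inputs, num_hiddens) * 0.01,
            torch.randn(num_hiddens, num_hiddens) * 0.01,
            torch.zeros(num_hiddens))

W_xz, W_hz, b_z = three()
W_xr, W_hr, b_r = three()
W_xh, W_hh, b_h = three()
X = torch.rand(batch_size, num_inputs)
H = torch.rand(batch_size, num_hiddens)

# Update gate forced close to 1: the old state is kept (long-term memory)
big = torch.full((num_hiddens,), 20.0)
H_kept = gru_step(X, H, [W_xz, W_hz, big, W_xr, W_hr, b_r, W_xh, W_hh, b_h])
print(torch.allclose(H_kept, H, atol=1e-5))  # True

# Update and reset gates forced close to 0: the past is ignored
H_reset = gru_step(X, H, [W_xz, W_hz, -big, W_xr, W_hr, -big, W_xh, W_hh, b_h])
print(torch.allclose(H_reset, torch.tanh(X @ W_xh + b_h), atol=1e-5))  # True
```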

Deep Learning Note 34 Concise Implementation of LSTM

import torch from torch import nn from d2l import torch as d2l batch_size, num_steps = 32, 35 train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps) vocab_size, num_...
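
For the concise version, this sketch shows the input and state layout of `nn.LSTM`. It reuses the batch size and number of steps from the excerpt. The vocabulary size of 28 and the hidden size of 256 are assumed values. The main practical difference from `nn.RNN` and `nn.GRU` is that the state is a tuple `(H, C)`.

```python
import torch
from torch import nn

vocab_size, num_hiddens = 28, 256  # assumed sizes
batch_size, num_steps = 32, 35     # same values as in the excerpt

lstm_layer = nn.LSTM(vocab_size, num_hiddens)
# The state is a tuple (H, C), each shaped (num_layers * num_directions, batch, hidden)
state = (torch.zeros(1, batch_size, num_hiddens),
         torch.zeros(1, batch_size, num_hiddens))
X = torch.rand(num_steps, batch_size, vocab_size)
Y, (H, C) = lstm_layer(X, state)
print(Y.shape, H.shape, C.shape)
# torch.Size([35, 32, 256]) torch.Size([1, 32, 256]) torch.Size([1, 32, 256])

# PyTorch stacks the four gates' input weights, hence 4 * num_hiddens rows
print(lstm_layer.weight_ih_l0.shape)  # torch.Size([1024, 28])
```

Because the LSTM state is a tuple rather than a single tensor, code that creates initial states for `nn.RNN`/`nn.GRU` and for `nn.LSTM` has to handle the two cases separately.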

MuQYY